Enhancing image resolution with machine learning has become a popular way to restore degraded images or improve their visual quality. Tools like chaiNNer, together with the ONNX model format and ONNX Runtime, make this process more efficient and scalable. However, errors can occasionally arise during model inference, especially when running exported ONNX models. One error developers encounter is:

[ONNXRuntimeError] : 1 : FAIL : Non-zero status code returned while running FusedMatMul node.

Name:'/layers.0/blocks.2/attn/attns.0/MatMul_FusedMatMulAndScale'

Status Message: matmul_helper.h:142 onnxruntime::MatMulComputeHelper::Compute left operand cannot broadcast on dim 1

This article gives an overview of chaiNNer and ONNX, then walks through steps to troubleshoot this error.

chaiNNer is an open-source, node-based image processing application: instead of writing code, users build a processing chain by connecting nodes in a flowchart-style GUI. It can run upscaling and restoration models in several formats, including PyTorch and ONNX, which makes it a popular choice for image restoration tasks.

Understanding ONNX

ONNX (Open Neural Network Exchange) is an open standard format that facilitates the transfer of machine learning models between different frameworks. By exporting models to ONNX, developers can deploy and run them across various environments and devices, regardless of the original training framework (such as PyTorch or TensorFlow).

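ONNX Runtime is a high-performance engine designed to execute ONNX models, providing CPU and GPU acceleration for fast inference. It is optimized for production environments and supports a wide range of neural network operations. Before running a model, it also applies graph optimizations; for example, it can fuse a MatMul followed by a scaling step into a single FusedMatMul node, which is why the node named in the error message carries the suffix '_FusedMatMulAndScale'.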

Context of the Error

In the context of enhancing image resolution, developers often train super-resolution models in PyTorch or TensorFlow and export them to ONNX for faster inference. When such a model is run through chaiNNer, the error above can occur in a matrix multiplication (MatMul) inside an attention layer, as the node name '/layers.0/blocks.2/attn/...' suggests. It typically arises when the tensors reaching that MatMul have incompatible shapes, often because the input image or tile size differs from what the exported graph can handle, which leads to a broadcasting failure.

Why This Happens

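The error message indicates that the left operand of the matrix multiplication cannot be broadcast to match the right operand. Broadcasting lets tensors with different shapes be combined by automatically expanding dimensions of size 1. For MatMul, only the last two dimensions are actually multiplied (the left operand's last dimension must equal the right operand's second-to-last), while the leading, batch-like dimensions are broadcast: at each of them the two sizes must be equal, or one of them must be 1. Dimension 1 in this error is one of those leading dimensions; the two operands disagree there and neither size is 1, so the operation fails.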
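The rule can be reproduced with NumPy, whose `matmul` follows the same semantics as ONNX MatMul. The shapes below are made up to resemble attention tensors laid out as (batch, heads, tokens, features); they are illustrative and not taken from the failing model.

```python
import numpy as np

# Illustrative 4D shapes: (batch, heads, tokens, features).
# Only the last two axes are multiplied; the leading axes are broadcast.
right = np.ones((2, 3, 8, 5))

# Dim 1 is 1 on the left, so it is stretched to 3 to match the right operand.
left_ok = np.ones((2, 1, 4, 8))
result = np.matmul(left_ok, right)
print(result.shape)  # (2, 3, 4, 5)

# Dim 1 is 2 on the left and 3 on the right: neither is 1, so broadcasting fails.
left_bad = np.ones((2, 2, 4, 8))
try:
    np.matmul(left_bad, right)
    failed = False
except ValueError as err:
    failed = True
    print("broadcast failed:", err)
```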

Steps to Troubleshoot and Fix the Error

Verify Model Input Size

- Ensure that the input image resolution or tensor size aligns with the expected input dimensions of the model.

- Check the model documentation or training script to confirm the required input shape.
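The expected shape can also be read from the exported file itself. A minimal sketch, assuming `onnxruntime` is installed: `describe_model_inputs` lists each input's declared shape, where ONNX Runtime reports fixed dimensions as integers and dynamic ones as strings (the names given at export) or `None`. The helper `shape_matches` then compares a declared shape with the shape of the tensor you are about to feed. Both function names are hypothetical.

```python
def describe_model_inputs(model_path):
    """Return (name, declared_shape) for every input of an ONNX model."""
    import onnxruntime as ort  # imported here so shape_matches works without it

    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    return [(inp.name, inp.shape) for inp in session.get_inputs()]


def shape_matches(declared, actual):
    """Check a concrete shape against a declared one.

    Integer entries must match exactly; str/None entries are dynamic
    and accept any size. The ranks must always be equal.
    """
    if len(declared) != len(actual):
        return False
    return all(not isinstance(d, int) or d == a for d, a in zip(declared, actual))
```

For example, `shape_matches([1, 3, 64, 64], (1, 3, 128, 64))` is `False` because the height is fixed at 64, while `shape_matches(["batch_size", 3, "height", "width"], (1, 3, 128, 64))` is `True`.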

Inspect Tensor Shapes

- Print and log the shapes of tensors just before the FusedMatMul node to identify mismatches.

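- Use debugging tools to inspect tensor dimensions at runtime. Setting `SessionOptions.graph_optimization_level` to `GraphOptimizationLevel.ORT_DISABLE_ALL` stops ONNX Runtime from fusing nodes, so the error should then name the original MatMul node, which is easier to find in the exported model.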

Preprocessing Consistency

- Ensure that the image preprocessing pipeline (resizing, normalization, etc.) is consistent with the model's training pipeline.

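- Mismatched preprocessing steps can lead to unexpected tensor shapes, for example an HWC array passed where the model expects NCHW, or a four-channel RGBA image fed to a three-channel model.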
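A minimal NumPy sketch of one common conversion for PyTorch-trained image models, assuming the model was trained on RGB images scaled to [0, 1] in NCHW layout. Those three assumptions (channel order, value range, layout) are exactly what to confirm against the training script; `to_model_input` is a hypothetical name.

```python
import numpy as np


def to_model_input(image):
    """Convert an HxWxC (or HxW) uint8 image into a 1xCxHxW float32 array in [0, 1].

    Assumes RGB: grayscale is repeated to three channels and an
    alpha channel is dropped.
    """
    if image.ndim == 2:  # grayscale HxW -> HxWx1
        image = image[:, :, None]
    if image.shape[2] == 1:
        image = np.repeat(image, 3, axis=2)
    elif image.shape[2] == 4:  # RGBA -> RGB
        image = image[:, :, :3]
    tensor = image.astype(np.float32) / 255.0
    tensor = np.transpose(tensor, (2, 0, 1))  # HWC -> CHW
    return np.ascontiguousarray(tensor[None, ...])  # add batch dim -> NCHW
```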

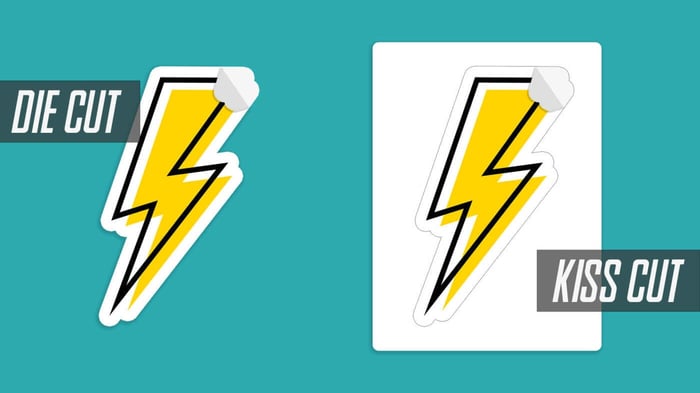

Export Model with Dynamic Axes

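- When exporting the model to ONNX, specify dynamic axes for the input (and output) tensors so the graph accepts sizes other than those of the dummy input. The keys of `dynamic_axes` must match the names passed in `input_names` and `output_names`. This only helps if the model's forward pass keeps shapes as traced tensor operations; sizes converted to plain Python numbers during export are stored in the graph as constants.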

- Example:

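```python
torch.onnx.export(model, dummy_input, 'model.onnx', input_names=['input'], output_names=['output'],
                  dynamic_axes={'input': {0: 'batch_size', 2: 'height', 3: 'width'}, 'output': {0: 'batch_size', 2: 'out_height', 3: 'out_width'}})
```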

ONNX Model Inspection

- Use tools like Netron to visualize the ONNX model and check the tensor shapes at each layer.

- Identify layers where the shape mismatch occurs and modify the model or input accordingly.

Fallback to Fixed Input Size

- If dynamic input sizes are not essential, consider resizing input images to a fixed shape compatible with the model’s requirements.
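For an upscaler, resizing the input changes what gets upscaled, so padding the image up to an accepted size and cropping the output back is an alternative worth considering. A minimal NumPy sketch, assuming an NCHW input, a placeholder target size or multiple (for instance the window size of an attention-based model; check the model's actual requirement), and a known integer scale factor. `pad_to`, `round_up`, and `crop_output` are hypothetical names, and replicate ("edge") padding is one choice among several.

```python
import numpy as np


def pad_to(x, target_h, target_w):
    """Replicate-pad an NCHW array on the bottom/right up to target_h x target_w."""
    _, _, h, w = x.shape
    if h > target_h or w > target_w:
        raise ValueError(f"input {h}x{w} is larger than target {target_h}x{target_w}")
    pad = ((0, 0), (0, 0), (0, target_h - h), (0, target_w - w))
    return np.pad(x, pad, mode="edge"), (h, w)


def round_up(n, multiple):
    """Smallest multiple of `multiple` that is >= n."""
    return -(-n // multiple) * multiple


def crop_output(y, original_hw, scale):
    """Crop the upscaled NCHW output back to scale * original size."""
    h, w = original_hw
    return y[:, :, : h * scale, : w * scale]
```

With a 4x model that needs multiples of 16 (both numbers are placeholders), you would call `pad_to(x, round_up(h, 16), round_up(w, 16))`, run the model on the padded array, and pass its result to `crop_output(result, hw, 4)`.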

Wrapping Up

Errors like the FusedMatMul broadcasting issue can disrupt workflows, but with systematic debugging and validation, they can be resolved. By ensuring consistent preprocessing, verifying input shapes, and using dynamic axes during model export, developers can enhance image resolution without encountering matrix multiplication failures.

Using chaiNNer and ONNX effectively requires careful attention to model architecture and input pipelines, but the results can include significant improvements in image quality and processing efficiency.

Additional Detail


This error message indicates that the shapes of the matrices (tensors) being multiplied are not compatible for the FusedMatMul operation. Specifically, ONNX Runtime cannot “broadcast” (automatically expand) the shape of the left operand along dimension 1.

In simpler terms, matrix multiplication requires compatible dimensions. For a 2D multiplication, a left matrix of shape (M, K) and a right matrix of shape (K, N) produce a result of shape (M, N). Higher-rank tensors, such as the 4D tensors inside an attention block, follow the same rule on their last two dimensions, while their leading dimensions must either be equal or be 1 so they can be broadcast. Here the two operands disagree at dimension 1, and ONNX Runtime cannot expand the left tensor to fit.

To fix this problem, you need to make sure the input tensors have the correct shapes for matrix multiplication. Check the shapes of the inputs to that FusedMatMul node and ensure they are compatible before performing the operation.
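The compatibility check can be written out in a few lines. The function below is a simplified, hypothetical re-implementation of the NumPy-style MatMul shape rule, not ONNX Runtime's source: it left-pads the lower-rank shape with 1s, requires the inner dimensions to agree, and broadcasts the leading dimensions, raising an error worded after the one above. It only handles operands of rank 2 or more (ONNX MatMul also accepts 1-D operands, which this sketch leaves out).

```python
def matmul_output_shape(left, right):
    """Return the MatMul result shape for two shapes of rank >= 2, or raise ValueError."""
    if len(left) < 2 or len(right) < 2:
        raise ValueError("this sketch only handles operands of rank >= 2")
    rank = max(len(left), len(right))
    # Left-pad the shorter shape with 1s so the leading dims line up.
    lpad = (1,) * (rank - len(left)) + tuple(left)
    rpad = (1,) * (rank - len(right)) + tuple(right)

    if lpad[-1] != rpad[-2]:
        raise ValueError(f"inner dimensions differ: {lpad[-1]} vs {rpad[-2]}")

    batch = []
    for i in range(rank - 2):  # leading (batch) dims only
        ld, rd = lpad[i], rpad[i]
        if ld != rd and ld != 1 and rd != 1:
            raise ValueError(f"left operand cannot broadcast on dim {i} ({ld} vs {rd})")
        batch.append(rd if ld == 1 else ld)  # a size-1 dim takes the other size
    return (*batch, lpad[-2], rpad[-1])
```

Feeding it the two input shapes found while inspecting the model shows at once whether the mismatch is in a leading dimension or in the inner (K) dimension.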